Nearly a year and a half ago, I wrote an article about the dangers and ethical concerns posed by the surge in popularity of art generated by artificial intelligence (AI). In it, I called for more regulation of AI but took a fairly moderate stance overall, conceding that AI has applications in creative fields and that fears about it could be overblown.

Since then, AI has progressed even further, and the problems now emerging are primarily attributable to the people in charge of developing it and rolling it out to the public. As someone with a background in computer science, I stand by what I said in my previous article about how fascinating and incredible AI technology is. That being said, the road I currently see AI going down is quite dark, and the root of the issue is capitalism.

Those responsible for creating AI should have two concerns at the forefront of their minds. First, they should ensure that what they’re developing is used for good, and second, they should mitigate or remove the possibility of their technology being used nefariously. The central complication, however, is that AI is predominantly developed by big tech companies: the usual suspects, such as Microsoft and Google, but also newcomers such as OpenAI, which Elon Musk co-founded.

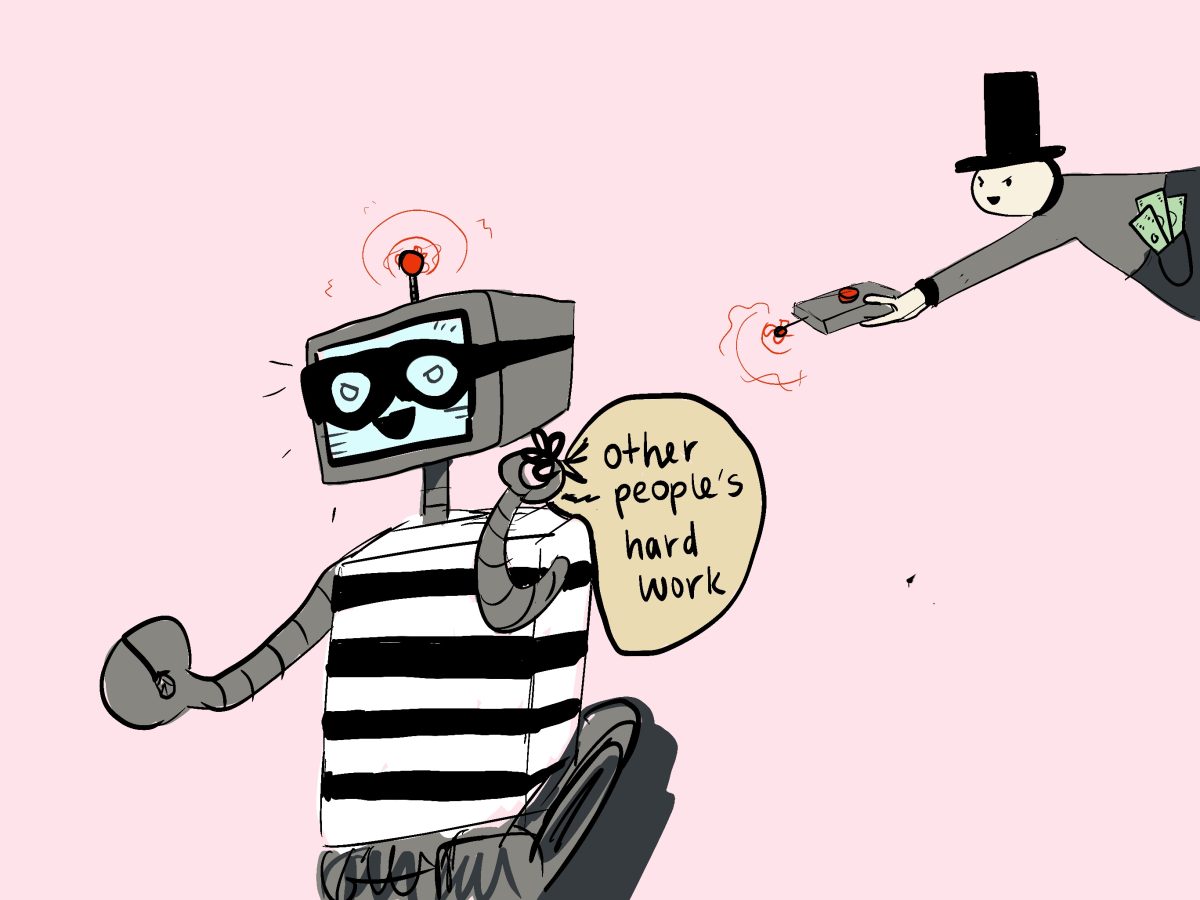

The centrality of corporations in AI development is a massive problem because the foremost concern of tech companies, or of any company for that matter, is generating profit rather than promoting ethical use of their products. And thus far, the most profitable strategy for tech corporations has been to roll AI out to consumers without a moment’s consideration for the implications of doing so.

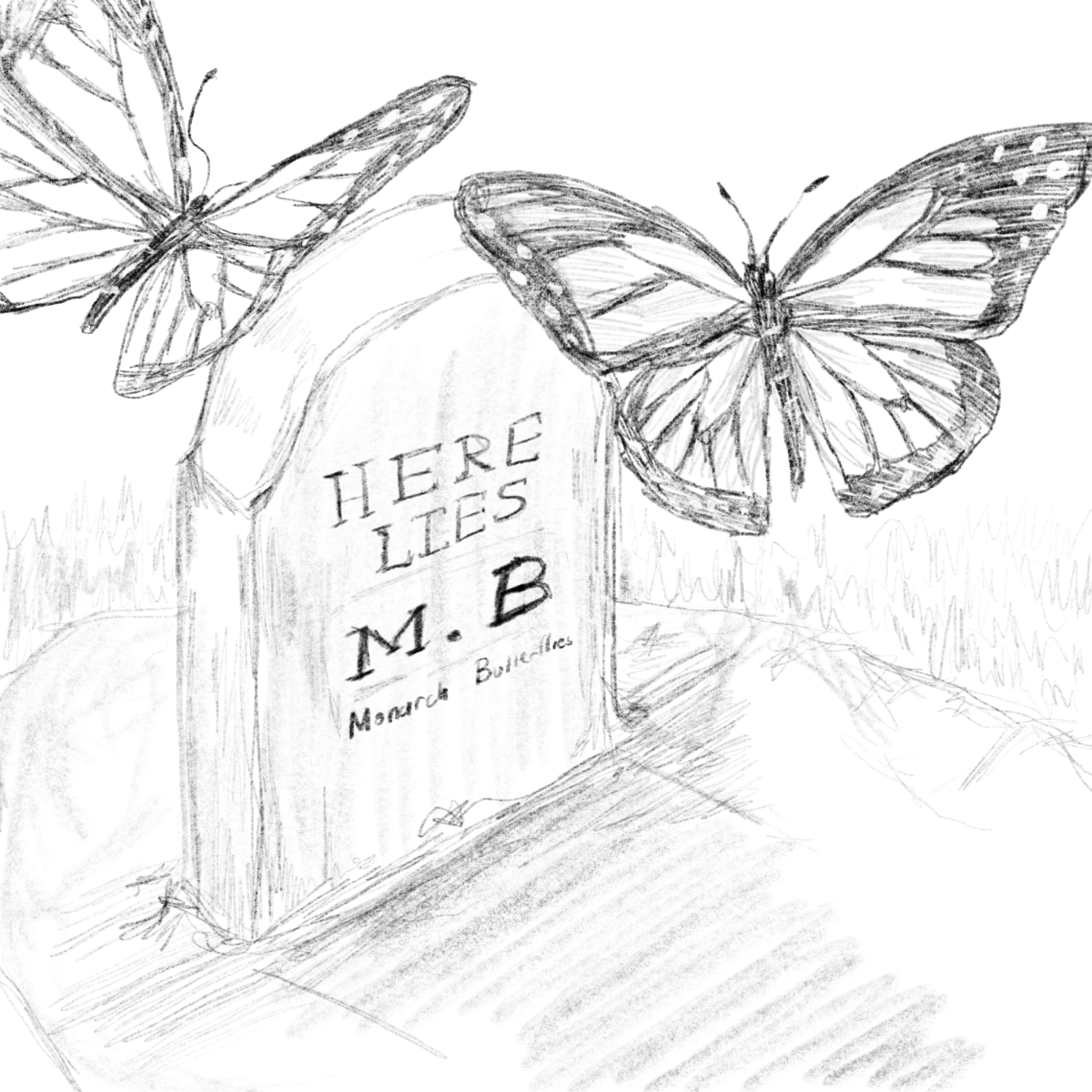

The negative effects of this are already clear and are getting worse all the time. Artists’ work is routinely being fed into AI algorithms without their consent, posing a real risk of artists being automated out of an already competitive and low-paying field. People’s work, voices and very likenesses are being stolen from them and used in ways they never intended. One horrifying, high-profile example came last month, when Taylor Swift became one of many people to have sexually explicit AI-generated images of them circulate online.

The images of Swift in particular seem to have been generated using Microsoft Designer. While Microsoft claims to have closed the loophole in its software that made it possible to generate these images in the first place, the company is still missing the point: whatever particular “loophole” allowed this to happen is not the main problem here.

The main problem is that extremely powerful technology is being rolled out by companies that either don’t know or don’t care how their software could be used nefariously. Tech companies seem content to let the ramifications of their software play out in real time, without regard for the lives that could be drastically affected by their negligence. After all, it’s much more profitable to roll the technology out now and work out the kinks by testing it on real people than to spend the resources needed to make sure nobody gets hurt in the first place.

In many ways, AI’s problems reflect the larger issue that our society doesn’t value consent or human dignity very much, or in some cases at all (especially for women). To tackle a smaller problem, though, my perfect-world solution to AI would be to nationalize the entire tech industry. Considering that we live in hell, however, I would instead humbly suggest that the geriatric amalgamation of people who are in charge of us for some reason climb out of the pockets of tech giants and pass legislation that heavily regulates AI and how it can be used.